McCormick Place, Lakeside Center

Sunday, September 25, 2005, through Wednesday, September 28, 2005

9:00 AM - 5:00 PM daily

8424

Development of a Three-Dimensional Craniofacial Trauma Surgery Simulator

Introduction: Craniofacial surgery is inherently complex in three dimensions. In practice, however, current technology allows only 2D surgical planning. We offer a new method for 3D post-traumatic craniofacial surgical planning that has the potential to simulate, in real time, the soft tissue changes associated with manipulation of the craniofacial skeleton. Our system is unique in its emphasis on user-friendliness and cost containment, both intended to foster widespread use in clinical practice.

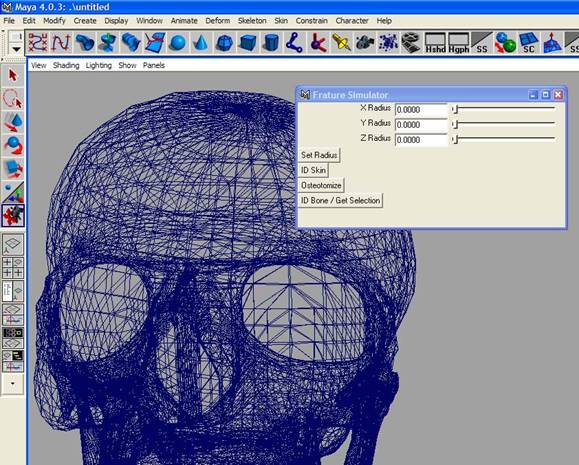

Methods: The system was programmed in MEL (Maya Embedded Language) and uses empirical data on the soft tissue response to craniofacial bone movement to predict the soft tissue consequences of skeletal changes. The first stage of the project involved designing and writing the program in MEL; we are currently programming an optimized version in C++. Because the system is designed as a plug-in for Maya (3D animation and modeling software), it is flexible: it can base its soft tissue predictions on different databases of soft tissue biomechanics as improved databases are developed (figure 1). The second stage of the project will correlate pre- and postoperative CT and laser scans of craniofacial trauma patients to build an improved database of soft tissue biomechanics.
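The Methods paragraph describes predictions driven by an exchangeable database of empirical soft tissue responses. The sketch below illustrates that separation under a loud assumption: each database entry is taken to store one per-axis ratio of soft tissue movement to bone movement for an anatomical region. The names `SoftTissueDatabase` and `predict_skin_shift`, the region key, and the ratio values are hypothetical placeholders, not the authors' data or plug-in code.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SoftTissueDatabase:
    """Hypothetical store of empirical soft-tissue/bone movement ratios.

    Each region maps to a per-axis (x, y, z) ratio: a ratio of 0.8 on an
    axis means the skin is assumed to follow 80% of the bone movement there.
    """
    name: str
    ratios: Dict[str, Vec3] = field(default_factory=dict)

    def ratio_for(self, region: str) -> Vec3:
        # Fail loudly if the database has no data for this region.
        if region not in self.ratios:
            raise KeyError(f"{self.name} has no entry for region {region!r}")
        return self.ratios[region]


def predict_skin_shift(db: SoftTissueDatabase, region: str, bone_shift: Vec3) -> Vec3:
    """Scale a bone displacement by the database ratios for its region."""
    rx, ry, rz = db.ratio_for(region)
    bx, by, bz = bone_shift
    return (rx * bx, ry * by, rz * bz)


# Placeholder numbers for illustration only; not measured values.
demo_db = SoftTissueDatabase("demo", {"zygoma": (0.5, 0.5, 0.5)})
print(predict_skin_shift(demo_db, "zygoma", (2.0, 0.0, -4.0)))  # prints (1.0, 0.0, -2.0)
```

Because the prediction function only reads ratios through `ratio_for`, passing a different `SoftTissueDatabase` instance changes the predictions without touching the prediction code, which mirrors the flexibility the Methods paragraph attributes to the plug-in design.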

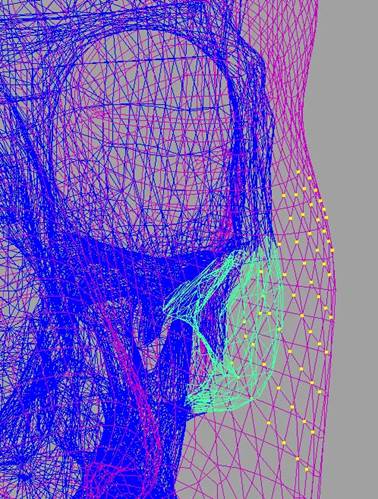

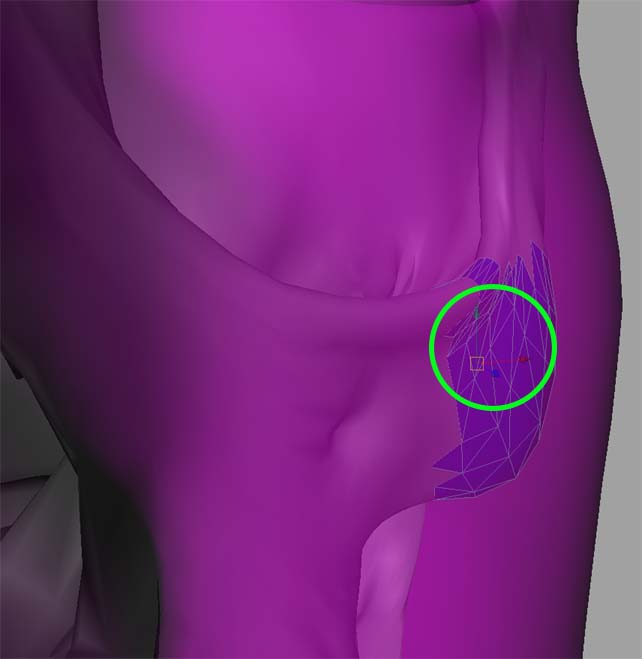

Results: We have completed the first part of the project: a Maya plug-in that can import processed skeletal and skin data from patient CT scans and allows the user to move bone fragments and view 3D predictions of the soft tissue results based on a user-defined database (figures 2 and 3). We are now positioned to begin the next part of the project, in which we will develop an improved database of craniofacial biomechanics.

Conclusion: We have designed the framework of a 3D virtual reality craniofacial planning system that will allow the user to simulate bone fragment manipulation with real-time prediction of the resulting soft tissue effects. Simulations can be viewed from any angle and manipulated interactively. Moreover, we have designed the system to be practical for widespread use. In its present form, the program is a useful educational tool; after the next stage of the project, it is intended to play a role in post-traumatic surgical planning.

Figure 1: The debugging version of the simulator window is shown in the Maya environment, with demo skull data in the background. For testing purposes, we are using the simplified geometry shown in these sample figures; the system is, however, compatible with importing real patient CT scan data. This debugging version allows the user to manually enter the X, Y, and Z distances from the osteotomized segment that define the region in which skin will be affected by bone movement. In the final version, this information will be part of a predefined biomechanical model. The “Osteotomize” button allows the user to create bone fragments to test the simulator.
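Figure 1 describes bounding the affected skin by user-entered X, Y, and Z distances from the osteotomized segment. One plausible reading, sketched here purely as an assumption, is an axis-aligned box: take the bounding box of the fragment's vertices, pad it by the entered distances on each axis, and flag every skin vertex inside the padded box (the yellow vertices of Figure 2). The function name `select_affected_vertices` and the box interpretation are hypothetical; the actual plug-in may define the region differently.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def select_affected_vertices(
    fragment: Sequence[Vec3],
    skin: Sequence[Vec3],
    margin: Vec3,
) -> List[int]:
    """Return indices of skin vertices inside the fragment's padded bounding box.

    `margin` holds the user-entered X, Y, Z distances (assumed same units as
    the mesh coordinates).
    """
    if not fragment:
        return []
    # Axis-aligned bounding box of the bone fragment.
    lo = [min(v[axis] for v in fragment) for axis in range(3)]
    hi = [max(v[axis] for v in fragment) for axis in range(3)]
    # Grow the box by the user-defined distance on each axis.
    lo = [lo[a] - margin[a] for a in range(3)]
    hi = [hi[a] + margin[a] for a in range(3)]
    # Keep skin vertices that fall inside the padded box on all three axes.
    return [
        i
        for i, v in enumerate(skin)
        if all(lo[a] <= v[a] <= hi[a] for a in range(3))
    ]


fragment = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
skin = [(0.5, 0.5, 3.0), (0.5, 0.5, 6.0), (-1.5, 0.5, 0.5)]
print(select_affected_vertices(fragment, skin, (1.0, 1.0, 2.5)))  # prints [0]
```

In the final version described in the caption, the hand-entered `margin` would presumably be replaced by values taken from the predefined biomechanical model.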

Figure 2: The simulation plug-in has identified the vertices (yellow) on the skin mesh (purple) that will be the focus of soft tissue deformation secondary to movement of the fractured bone fragment (green), here a portion of the body of the zygoma.

Figure 3: A perspective similar to that in Figure 2, with shading activated so that surfaces are visible (the bone fragment is blue). The three arrows within the green circle form the manipulator, which the user drags to move the bone fragment in 3D space; the overlying skin, bounded by the yellow vertices illustrated in Figure 2, changes in real time in response.
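Figure 3 describes the skin responding in real time as the manipulator drags the fragment. A minimal per-drag update, assuming pure translation and a single per-axis soft tissue ratio supplied by the database, moves every fragment vertex by the full drag offset and every bounded skin vertex by the scaled offset. Rotation and Maya's actual manipulator callbacks are left out; `apply_drag` and its parameters are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def apply_drag(
    fragment: List[Vec3],
    skin: List[Vec3],
    affected: Sequence[int],
    offset: Vec3,
    ratio: Vec3,
) -> None:
    """Update meshes in place for one manipulator drag (translation only).

    Bone vertices move by the full offset; skin vertices listed in
    `affected` move by the offset scaled per axis by `ratio`.
    Skin vertices outside the bounded region are left untouched.
    """
    dx, dy, dz = offset
    for i, (x, y, z) in enumerate(fragment):
        fragment[i] = (x + dx, y + dy, z + dz)
    # Predicted skin displacement: bone offset scaled by the assumed ratios.
    sx, sy, sz = (ratio[0] * dx, ratio[1] * dy, ratio[2] * dz)
    for i in affected:
        x, y, z = skin[i]
        skin[i] = (x + sx, y + sy, z + sz)


fragment = [(0.0, 0.0, 0.0)]
skin = [(0.0, 0.0, 5.0), (0.0, 0.0, 9.0)]
apply_drag(fragment, skin, affected=[0], offset=(2.0, 0.0, 0.0), ratio=(0.5, 0.5, 0.5))
print(fragment, skin)  # prints [(2.0, 0.0, 0.0)] [(1.0, 0.0, 5.0), (0.0, 0.0, 9.0)]
```

Updating only the indexed skin vertices keeps the per-drag cost proportional to the bounded region rather than the whole skin mesh, which is one reason such a design could stay interactive.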
